Rhythmic Considerations

There's a simplicity about rhythm in a line of monophonic music. There's no "up or down" in contour, no loud or soft, no infinitesimal shades of timbre. Discounting articulation and note length, a note simply attacks or doesn't. All that remains is a one-dimensional interonset interval between one attack and the next.

The complicated and expressive thing about rhythm is its context, in terms of both expectation and familiarity. Certain genres are instantly recognizable by their distinctive rhythms. What makes a rhythm make sense in the context of other rhythms? In a single line of music, rhythm unfolds linearly, and our ears can readily pick out rhythms that aren't "grammatical" to the line.

This makes sense:

from Rimsky-Korsakov's Procession of the Nobles

But this doesn't:

Nor does this:

In the first example, the quintuplet that finishes the phrase is wildly out of place among the regular divisions of the beat that precede it, even though the melody is preserved. In the second example, the available note durations are preserved, but beginning in the second measure, there seem to be no rules governing the progression from one note value to another. Of course, in a larger context, these abnormalities could be points of articulation or recurring motives. But in general, the rhythmic language of a piece depends on a limited number of note values and a limited range of movement between those values.

The first rhythm module I've built for JALG uses the highly flexible [prob] object to make decisions about rhythm. [prob] creates weighted probability tables that move from one numbered "stage" to another each time it receives an impulse. It takes messages in groups of three integers: current stage, next stage, weight. The weight is not a percentage; rather, it is divided by the sum of all the weights listed for that stage. For example, the full probability table:

1:1 25

1:2 25

means that stage 1 has an equal chance (25 out of a total weight of 50) of repeating or of moving to stage 2.
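To make the weighting concrete, here is a minimal Python sketch of a [prob]-style table. It is not Max code and makes no claim about how [prob] works internally; it only assumes, as described above, that each weight is divided by the sum of the weights for its stage. The function names are hypothetical, and only stage 1 is defined, matching the example table.

```python
import random

# Hypothetical [prob]-style table: {current_stage: {next_stage: weight}}.
# Mirrors the example "1 1 25" and "1 2 25"; weights are relative, not percentages.
table = {
    1: {1: 25, 2: 25},
}

def transition_probabilities(table, stage):
    """Return {next_stage: probability}, dividing each weight by the stage's total."""
    weights = table[stage]
    total = sum(weights.values())
    return {nxt: w / total for nxt, w in weights.items()}

def next_stage(table, stage, rng=random):
    """Pick the next stage in proportion to its weight (one 'impulse')."""
    choices = list(table[stage])
    weights = [table[stage][c] for c in choices]
    return rng.choices(choices, weights=weights, k=1)[0]

print(transition_probabilities(table, 1))  # {1: 0.5, 2: 0.5}
print(next_stage(table, 1, random.Random(0)))
```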

Because the weights are relative, [prob] is quite flexible: values can easily be changed within each stage without rebalancing the others to a fixed total.

Each of these stages corresponds to a particular rhythmic value. Max allows the [metro] object to be tempo-synced, so it outputs impulses locked to the global tempo. By defining the possible progressions from each stage, I can create a grammar for the rhythm modules to follow.
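Under the hood, tempo-syncing comes down to simple arithmetic. This sketch converts each stage's note value to milliseconds, assuming the tempo is given in quarter-note beats per minute. The labels only mirror Max's 4n/8n/8nd/16n shorthand; `NOTE_BEATS` and `note_ms` are hypothetical names, not a Max API.

```python
# Durations of the note values used in this post, in quarter-note beats.
NOTE_BEATS = {
    "4n": 1.0,    # quarter note
    "8nd": 0.75,  # dotted eighth
    "8n": 0.5,    # eighth note
    "16n": 0.25,  # sixteenth note
}

def note_ms(value, bpm):
    """Length of one note value in milliseconds at a quarter-note tempo of `bpm`."""
    return NOTE_BEATS[value] * 60000.0 / bpm

for v in NOTE_BEATS:
    print(v, note_ms(v, 120))
```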

How do I populate the list of rhythms? I could do it more or less randomly (how I've been operating up until now), or create a set of templates for rhythm. I'm going to make my first ones based on musical rhythms that I like from other composers. Here's a sample process for creating a probability table for a specific rhythm.

Steve Reich's Clapping Music...

I'm going to choose Steve Reich's iconic Clapping Music, famously built from a single rhythm (in a bout of characteristic nerdiness, I've also made this rhythm the custom vibration pattern for my phone calls).

...with only interonset values.

Though this passage is notated with rests, it makes more sense for the rhythm module to treat an eighth note (8n) plus an eighth rest as a quarter note (4n), keeping only the interonset values. That leaves pretty low rhythmic variety.
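The conversion from notated rhythm to interonset values can be sketched in Python. This assumes the familiar Clapping Music cell of twelve eighth notes (claps grouped 3-2-1-2, each group separated by an eighth rest) and treats the cell as a loop, so the last interval wraps around to the first clap. `CELL`, `NAMES`, and `interonset_values` are hypothetical names.

```python
# Clapping Music's basic cell, one character per eighth note:
# "x" = clap, "." = eighth rest (12 eighths per cycle).
CELL = "xxx.xx.x.xx."

# Interonset interval measured in eighths -> note value label.
NAMES = {1: "8n", 2: "4n"}

def interonset_values(cell):
    """Collapse each attack plus any following rests into one IOI value.

    The cell is treated as a loop, so the last attack's interval wraps
    around to the first attack of the next repetition.
    """
    onsets = [i for i, ch in enumerate(cell) if ch == "x"]
    n = len(cell)
    iois = [(onsets[(k + 1) % len(onsets)] - onsets[k]) % n
            for k in range(len(onsets))]
    return [NAMES[i] for i in iois]

print(interonset_values(CELL))
```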

With the quarter note as the beat, there are eight distinct attacks per cycle. It's important to consider what I choose as the "controlling metronome," that is, a metronome synced with the global tempo that forces [prob] to choose the next stage for the note impulse. If this value is set to (4n), for example, a new note value can only be chosen every (4n). In this case, I'll want to set it to (8n), eighth notes.

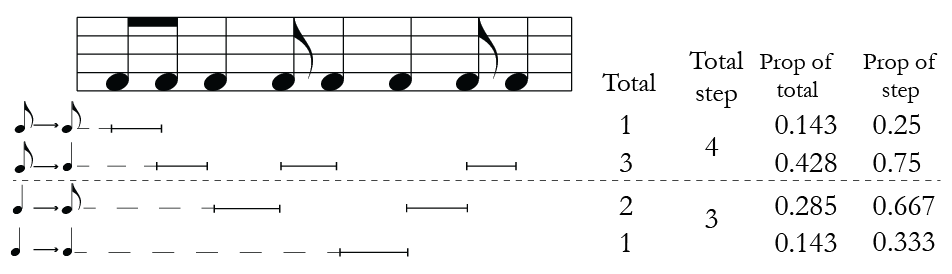

The possible rhythm transitions are shown to the right. This corresponds to the permutations of 2 rhythmic values taken 2 at a time, with repetition.

(2 * 2 = 4 possible transitions)
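The count is just the number of ordered pairs with repetition allowed, n * n for n note values, which `itertools.product` enumerates directly. A quick check for the two sets of note values used in this post (`possible_transitions` is a hypothetical helper):

```python
from itertools import product

def possible_transitions(values):
    """All ordered (from, to) pairs with repetition allowed: len(values) ** 2 of them."""
    return list(product(values, repeat=2))

clapping = possible_transitions(["8n", "4n"])
procession = possible_transitions(["4n", "8nd", "8n", "16n"])
print(len(clapping), clapping)
print(len(procession))
```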

How does this manifest in the rhythmic pattern?

Since each step moves linearly from one note to the next, I looked at how often each step from one note value to another occurs, and at what proportion of a given note value's possible paths each step accounts for.

Every possible transition appears in the model rhythm, though, as we'll soon see, this won't necessarily be the case. (8n) is three times more likely to proceed to a quarter note than to repeat, but (8n) and (4n) are equally likely to repeat. Taking this rhythmic template, I ran the module for 8 bars of 4/4:
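The weights can be read straight off the model rhythm by counting adjacent pairs. A Python sketch, assuming the cell loops (so the final (4n) leads back into the opening (8n)) and using a hypothetical stage numbering of 1 = (8n), 2 = (4n) when printing [prob]-style "stage1 stage2 weight" lines. Raw counts can serve as weights because each weight is divided by its stage's total anyway.

```python
from collections import Counter

# Interonset values for one cycle of Clapping Music (8 attacks).
IOIS = ["8n", "8n", "4n", "8n", "4n", "4n", "8n", "4n"]

# Hypothetical stage numbering for the [prob]-style messages.
STAGE = {"8n": 1, "4n": 2}

def transition_counts(seq, loop=True):
    """Count (from, to) pairs; with loop=True the last note wraps to the first."""
    pairs = list(zip(seq, seq[1:]))
    if loop:
        pairs.append((seq[-1], seq[0]))
    return Counter(pairs)

counts = transition_counts(IOIS)
for (a, b), w in sorted(counts.items()):
    print(f"{STAGE[a]} {STAGE[b]} {w}")  # current stage, next stage, weight
```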

The breakdown of note transitions is shown to the right. The model rhythm doesn't happen to be duplicated in these results.

Hmm, it doesn't quite match the distribution seen in Clapping Music, especially because of the runs of consecutive eighth notes and consecutive quarter notes. This is to be expected from such a small sample size. If I were to let the module run for even a few minutes, the distribution would probably look much closer to its model rhythm. But even its first iteration is recognizably Reichian! (-ien?) I find myself craving the first or second half of the cell when its counterpart appears in the output.
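The small-sample explanation is easy to test outside Max. This Python sketch (hypothetical helper names, with Python's `random` module standing in for [prob]) runs the Clapping Music template for a short and a long stretch and reports how often (8n) moves to (4n). The template value is 3 / (3 + 1) = 0.75; the long run should land much closer to it than the short one typically does.

```python
import random
from collections import Counter

# Clapping Music template: (8n) -> (4n) is three times as likely as (8n) -> (8n),
# and likewise (4n) -> (8n) versus (4n) -> (4n). Weights are relative.
TABLE = {
    "8n": {"8n": 1, "4n": 3},
    "4n": {"8n": 3, "4n": 1},
}

def run(table, start, steps, seed=None):
    """Generate `steps` note values by repeatedly sampling the next stage."""
    rng = random.Random(seed)
    seq = [start]
    for _ in range(steps - 1):
        options = table[seq[-1]]
        seq.append(rng.choices(list(options), weights=list(options.values()))[0])
    return seq

def share(seq, a, b):
    """Fraction of the transitions out of `a` that go to `b`."""
    pairs = Counter(zip(seq, seq[1:]))
    out_of_a = sum(n for (x, _), n in pairs.items() if x == a)
    return pairs[(a, b)] / out_of_a if out_of_a else 0.0

short_run = run(TABLE, "8n", 40, seed=1)
long_run = run(TABLE, "8n", 20000, seed=1)
print(share(short_run, "8n", "4n"), share(long_run, "8n", "4n"))
```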

OK, how about a slightly more complicated rhythm? Let's take a tiny bit of a great melody from Nikolai Rimsky-Korsakov's Procession of the Nobles:

There is a greater variety of rhythmic values in this excerpt: quarter notes (4n), eighth notes (8n), dotted eighth notes (8nd), and sixteenth notes (16n). In the above example I eliminated all the rhythm progressions that didn't occur, to save space (there are 4 * 4 = 16 possible progressions). Some notes about this set:

- (8n) is the most promiscuous of the note values, pulled by the gravity of every other note value. This makes sense given that it lies roughly in the middle of the other durational values. Apart from the dotted figure, where (8nd) drops to (16n) at a ratio of 3:1, notes in this music never more than double or halve in duration from one to the next, so (8n) acts as a bridge between them.

- (8nd) is always followed by (16n), and (4n) is always followed by (8nd). The first of these rules is natural to the rhythmic language of the piece from which the excerpt is taken: syncopation never happens at a level as fine as (16n) in this piece. Otherwise, the stately, steady meter could easily be disrupted. The second is probably a function of how short an excerpt I've taken, though Rimsky-Korsakov DOES save the quarter notes for the gravitational pull of every sixth beat.

Here are a few bars from running the Procession blueprint through the rhythm module:

And a breakdown of note...processions.

Still widely variant in a short excerpt, but much more consistent within steps. The (16n) stage behaves almost exactly as it does in the model rhythm. It's kind of hard to compare the others quantitatively, because not every note combination occurred. Perhaps I should have waited for an output that also produced (4n), but this omission highlights the quarter note's plight: it can only be approached from (8n), so it has only an 8% chance of ever occurring. The output of this module doesn't recall the model at all, but at least the rhythms coming out of it would be grammatical to Rimsky-Korsakov.
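The "grammatical" claim can be checked mechanically. This minimal sketch encodes only the two hard rules listed above, (8nd) → (16n) and (4n) → (8nd), and flags any transition in an output sequence that breaks them. Stages without a rule are left unconstrained, since the full Procession table isn't reproduced here; `RULES` and `ungrammatical` are hypothetical names.

```python
# Hard rules from the Procession excerpt: the allowed successors of a stage.
# Stages missing from RULES are treated as unconstrained in this sketch.
RULES = {
    "8nd": {"16n"},
    "4n": {"8nd"},
}

def ungrammatical(seq, rules=RULES):
    """Return indices i where the step seq[i] -> seq[i + 1] breaks a rule."""
    return [i for i, (a, b) in enumerate(zip(seq, seq[1:]))
            if a in rules and b not in rules[a]]

print(ungrammatical(["8n", "4n", "8nd", "16n", "8n"]))  # []
print(ungrammatical(["8n", "8nd", "8n", "4n", "16n"]))  # [1, 3]
```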

And now because I just *have* to do it: a combination of both probability tables.

Procession of the Clapping Music

Now, I realize this data isn't normalized: there are a lot more rules in Procession's blueprint than in Clapping Music's, so it has undue influence on the total weights for each stage. This is one thing to account for in future attempts, and a concept I'll need to revisit regularly as I expand the module's capabilities.
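One possible fix, sketched here as an assumption rather than a settled design: normalize each table per stage before combining, then average, so each source gets an equal say in every stage it defines regardless of how many rules or how large its raw weights it has. The `other` table is hypothetical, standing in for the real Procession weights, which aren't listed in this post.

```python
def normalize(table):
    """Scale each stage's weights so they sum to 1."""
    out = {}
    for stage, nexts in table.items():
        total = sum(nexts.values())
        out[stage] = {b: w / total for b, w in nexts.items()}
    return out

def merge(*tables):
    """Average normalized tables stage by stage.

    Each table gets an equal vote for every stage it defines, no matter
    how many rules it has or how large its raw weights are.
    """
    normed = [normalize(t) for t in tables]
    stages = {s for t in normed for s in t}
    merged = {}
    for stage in stages:
        voters = [t[stage] for t in normed if stage in t]
        combined = {}
        for probs in voters:
            for b, p in probs.items():
                combined[b] = combined.get(b, 0.0) + p / len(voters)
        merged[stage] = combined
    return merged

clapping = {"8n": {"8n": 1, "4n": 3}, "4n": {"8n": 3, "4n": 1}}
# Hypothetical weights for illustration only, not the real Procession table.
other = {"8n": {"8n": 30, "16n": 10}}
print(merge(clapping, other)["8n"])
```

Summed raw, the hypothetical table's weights (30 and 10) would swamp Clapping Music's (1 and 3); after normalizing, both contribute equally to the (8n) stage.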

Tuplets - Max's note-value syntax can handle triplet divisions but no other tuplets. I'll have to build a separate tuplet module that converts beat/tempo information into milliseconds so that beats or half-beats can be evenly divided into groups of 5, 7, 9, etc.
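The arithmetic for such a tuplet module is straightforward. A Python sketch, assuming tempo in quarter-note BPM and that the start time of the divided span is already known from the global clock; `tuplet_ms` and `tuplet_onsets` are hypothetical names.

```python
def tuplet_ms(bpm, n, span_beats=1.0):
    """Length of one note when `span_beats` quarter-note beats are split into n.

    For example, n=5 with span_beats=1 gives five notes per beat;
    n=7 with span_beats=0.5 divides a half-beat into seven.
    """
    beat_ms = 60000.0 / bpm
    return span_beats * beat_ms / n

def tuplet_onsets(bpm, n, span_beats=1.0, start_ms=0.0):
    """Onset times (ms) of the n tuplet notes, measured from start_ms."""
    step = tuplet_ms(bpm, n, span_beats)
    return [start_ms + k * step for k in range(n)]

print(tuplet_ms(120, 5))  # 100.0
print(tuplet_onsets(120, 5))
```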

Polyrhythm - I am going to have to figure out how to configure rhythm control modules so they can reference divisions internally but still remain synced to a global clock. I could get some very cool polyrhythmic content out of this with very strange divisions if I could run parallel but referenced rhythm modules.
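One way to keep parallel divisions referenced to a single clock is to compute every onset as an offset from a shared cycle start, rather than letting each module keep its own time. A sketch under that assumption, with hypothetical names; exact fractions avoid accumulated rounding drift. Divisions a and b of the same cycle line up gcd(a, b) times per cycle, so 3 against 4 meets only on the downbeat, while 4 against 6 meets twice.

```python
from fractions import Fraction

def division_onsets(divisions, cycle_beats):
    """Onsets, in beats from the cycle start, of `divisions` equal notes."""
    step = Fraction(cycle_beats) / divisions
    return [k * step for k in range(divisions)]

def shared_onsets(a, b, cycle_beats):
    """Points (in beats) where two divisions of the same cycle coincide."""
    return sorted(set(division_onsets(a, cycle_beats)) &
                  set(division_onsets(b, cycle_beats)))

def to_ms(onsets, bpm, cycle_start_ms=0.0):
    """Place beat offsets on the global clock, in milliseconds."""
    return [cycle_start_ms + float(t) * 60000.0 / bpm for t in onsets]

# 3 against 4 across one bar of 4/4 at 120 BPM.
print(to_ms(division_onsets(3, 4), 120))
print(to_ms(division_onsets(4, 4), 120))
print(shared_onsets(3, 4, 4), shared_onsets(4, 6, 4))
```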

Phrasing - I really don't want just an uninterrupted stream of notes. Though figuring out the possibilities of one rhythmic value moving to another is important, I need to extend the profile beyond a single note, so that longer strings of notes, like words into phrases, are triggered probabilistically. Perhaps this module could average and learn from its own output: the primacy of the first interpretation populates its future function, birthed and spun out in one direction by chance.
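Extending the profile beyond one note is commonly done with a higher-order Markov chain, where the next value depends on the last two (or more) values rather than just the last one. This is my substitution for illustration, not the phrase-triggering design described above. A Python sketch on the Clapping Music cell, treated as a loop; `context_counts` is a hypothetical name.

```python
from collections import Counter, defaultdict

# One cycle of Clapping Music's interonset values (treated as a loop).
IOIS = ["8n", "8n", "4n", "8n", "4n", "4n", "8n", "4n"]

def context_counts(seq, order=2):
    """Map each run of `order` consecutive values to counts of what follows.

    order=1 reproduces the single-note transition table; higher orders let
    the module condition on a short fragment instead of just the last note.
    """
    table = defaultdict(Counter)
    n = len(seq)
    for i in range(n):
        context = tuple(seq[(i + k) % n] for k in range(order))
        table[context][seq[(i + order) % n]] += 1
    return table

second = context_counts(IOIS, order=2)
for ctx in sorted(second):
    print(ctx, dict(second[ctx]))
```

With order 2, two eighths in a row are always followed by a quarter and two quarters by an eighth, so runs of identical values longer than two notes, like the ones in the 8-bar test, could not appear.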

Here's what one of these rhythm control modules looks like so far:

I imagine it's going to take up a little more real estate on the screen soon.